ceph

Introduction

Ceph is a reliable distributed storage system that rebalances and recovers itself automatically. By use case, Ceph can be divided into three parts:

- Object storage: object

- Block storage: block

- File system service: file

Core components

OSD

Object Storage Device. Its main jobs are storing data; handling data replication, recovery, backfilling, and rebalancing; and reporting related information to the Ceph Monitors.

MON

i.e., the monitor

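The Ceph monitor. Its main job is to maintain the health state of the whole cluster and to make consistent decisions. It holds the cluster map (the monitor map, OSD map, and so on). The monitor itself does not store any user data.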

Mgr

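The Ceph Manager daemon (ceph-mgr) keeps track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load.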

MDS

Ceph Metadata Server. It stores the metadata of the Ceph file system (CephFS). It is optional and only needed when CephFS is used.

rgw

The object storage gateway (RADOS Gateway).

Basic components

rados

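RADOS is itself a complete distributed object storage system that is reliable, intelligent, and distributed. Ceph's high reliability, scalability, performance, and automation are all provided by this layer, and all user data is ultimately stored through it. RADOS is the core of Ceph. It consists of two main parts:

OSDs and Monitors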

Librados

A library that lets applications interact with RADOS directly. It supports several programming languages, such as C, C++, and Python.

RBD, RGW, and CephFS can all be regarded as upper-layer application interfaces built on top of RADOS.

radosgw

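RADOSGW is a gateway based on the popular RESTful protocol (it offers S3- and Swift-compatible APIs). It is only needed when object storage is used.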

rbd

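RBD provides a distributed block device through the Linux kernel client and the QEMU/KVM driver. You can think of it like Linux LVM: a disk is carved out of the Ceph cluster, and users can create a file system on it and mount it directly.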
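The sketch below makes the LVM comparison concrete. It walks through the block-device workflow on a host that already has ceph-common and a working /etc/ceph/ceph.conf plus keyring (those are configured later in this article). The function name `rbd_demo`, the pool `replicapool`, the image `demo-img`, and the mount point are hypothetical placeholders.

```shell
# Sketch: carve a block device out of Ceph and mount it, LVM-style.
# Pool/image/mount-point defaults are hypothetical placeholders.
rbd_demo() {
  local pool="${1:-replicapool}" image="${2:-demo-img}" mnt="${3:-/mnt/rbd-demo}"
  local dev

  # 1 GiB image (--size is in MiB); only the "layering" feature, which
  # older kernel RBD clients are able to map.
  rbd create "${pool}/${image}" --size 1024 --image-feature layering || return 1
  # Map it through the kernel client; rbd map prints the device, e.g. /dev/rbd0.
  dev="$(rbd map "${pool}/${image}")" || return 1
  # From here on it is an ordinary disk: make a file system and mount it.
  mkfs -t ext4 "$dev" || return 1
  mkdir -p "$mnt" || return 1
  mount "$dev" "$mnt" || return 1
  echo "mounted ${pool}/${image} (${dev}) on ${mnt}"
}
```

Usage: `rbd_demo replicapool demo-img /mnt/rbd-demo`. To undo it, unmount the directory, `rbd unmap` the device, and `rbd rm replicapool/demo-img`.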

CephFS

Provides a POSIX-compatible file system through the Linux kernel client and FUSE.

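When a Linux system cannot mount CephFS with the kernel client (mount -t ceph), or when more advanced operations are needed, ceph-fuse is used instead.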
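Here is a sketch of the two CephFS client paths described above. It assumes a CephFS file system already exists and that the secret file contains only the client.admin key (for example, the output of `ceph auth get-key client.admin`). The function name, default mount point, and secret-file path are hypothetical.

```shell
# Sketch: mount CephFS with the kernel client, falling back to ceph-fuse.
mount_cephfs() {
  local mon="$1" mnt="${2:-/mnt/cephfs}" secretfile="${3:-/etc/ceph/admin.secret}"
  if [ -z "$mon" ]; then
    echo "usage: mount_cephfs MON_IP:PORT [MOUNTPOINT] [SECRETFILE]" >&2
    return 2
  fi
  mkdir -p "$mnt" || return 1
  # Kernel client (needs the ceph kernel module and mount.ceph from ceph-common);
  # name=admin means the client.admin user.
  if mount -t ceph "${mon}:/" "$mnt" -o "name=admin,secretfile=${secretfile}"; then
    echo "mounted via kernel client"
    return 0
  fi
  # FUSE client: uses /etc/ceph/ceph.conf and the keyring it points to.
  ceph-fuse -m "$mon" "$mnt" && echo "mounted via ceph-fuse"
}
```

Usage: `mount_cephfs 192.168.2.131:6789 /mnt/cephfs`. The monitor address here is one of the example mon IPs from the recovery section.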

rook

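Rook is a self-managing distributed storage orchestration system that provides convenient storage solutions for Kubernetes. Rook does not provide storage itself. Instead, it provides an adaptation layer between Kubernetes and the storage system, which simplifies deploying and maintaining that system. Rook currently supports storage systems including Ceph, CockroachDB, Cassandra, EdgeFS, Minio, and NFS.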

Rook uses Kubernetes primitives to run the Ceph storage system on Kubernetes.

Rook components

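Operator: made up of a set of CRDs and an all-in-one image that contains everything needed to start and monitor the storage system.

Cluster: creates the CRD objects and specifies the related parameters, including the Ceph image, where metadata is persisted, disk locations, the dashboard, and so on.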

Deploying rook-ceph

Kubernetes 1.15 (anything older than 1.16) needs this pre-k8s-1.16 crds.yaml:

wget https://raw.githubusercontent.com/rook/rook/release-1.5/cluster/examples/kubernetes/ceph/pre-k8s-1.16/crds.yaml

Main steps, using v1.5.9:

git clone --single-branch --branch v1.5.9 https://github.com/rook/rook.git

Walkthrough

Clone the source and check out the v1.5.9 branch (the latest release at the time of writing):

[root@k8s01 ~]# git clone --single-branch --branch v1.5.9 https://github.com/rook/rook.git

Change to the ceph directory (rook/cluster/examples/kubernetes/ceph):

[root@k8s01 ~]# ls

ceph-client.yaml crds.yaml filesystem.yaml object-multisite.yaml pre-k8s-1.16

cluster-external-management.yaml create-external-cluster-resources.py flex object-openshift.yaml rbdmirror.yaml

cluster-external.yaml create-external-cluster-resources.sh import-external-cluster.sh object-test.yaml rgw-external.yaml

cluster-on-pvc.yaml csi monitoring object-user.yaml scc.yaml

cluster-stretched.yaml dashboard-external-https.yaml nfs-test.yaml object.yaml storageclass-bucket-delete.yaml

cluster-test.yaml dashboard-external-http.yaml nfs.yaml operator-openshift.yaml storageclass-bucket-retain.yaml

cluster-with-drive-groups.yaml dashboard-ingress-https.yaml object-bucket-claim-delete.yaml operator.yaml test-data

cluster.yaml dashboard-loadbalancer.yaml object-bucket-claim-retain.yaml osd-purge.yaml toolbox-job.yaml

common-external.yaml direct-mount.yaml object-ec.yaml pool-ec.yaml toolbox.yaml

common.yaml filesystem-ec.yaml object-external.yaml pool-test.yaml

[root@k8s01 ceph]# mv crds.yaml crds.yaml.bak # keep the default (1.18) version as a backup

[root@k8s01 ceph]# wget https://raw.githubusercontent.com/rook/rook/release-1.5/cluster/examples/kubernetes/ceph/pre-k8s-1.16/crds.yaml

--2021-03-26 09:28:26-- https://raw.githubusercontent.com/rook/rook/release-1.5/cluster/examples/kubernetes/ceph/pre-k8s-1.16/crds.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 21144 (21K) [text/plain]

Saving to: ‘crds.yaml’

crds.yaml 100%[====================================================================================================>] 20.65K --.-KB/s in 0.001s

2021-03-26 09:28:27 (30.0 MB/s) - ‘crds.yaml’ saved [21144/21144]

Deploy the common resources:

kubectl create -f crds.yaml -f common.yaml

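Deploy operator.yaml. Configure it carefully before applying it: the operator settings cannot be changed after Ceph has been installed, otherwise Rook deletes and rebuilds the cluster.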

Change the images it uses:

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.6.2"

ROOK_CSI_REGISTRAR_IMAGE: "registry.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.5.1"

ROOK_CSI_RESIZER_IMAGE: "registry.aliyuncs.com/google_containers/csi-resizer:v1.4.0"

ROOK_CSI_PROVISIONER_IMAGE: "registry.aliyuncs.com/google_containers/csi-provisioner:v3.1.0"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.aliyuncs.com/google_containers/csi-snapshotter:v6.0.1"

ROOK_CSI_ATTACHER_IMAGE: "registry.aliyuncs.com/google_containers/csi-attacher:v3.4.0"

ROOK_CSI_NFS_IMAGE: "registry.aliyuncs.com/google_containers/nfsplugin:v4.0.0"

# gRPC metrics

ROOK_CSI_ENABLE_GRPC_METRICS: "true"

# Device auto-discovery daemon ("true" enables it)

ROOK_ENABLE_DISCOVERY_DAEMON: "false"

Additional settings (the defaults can usually stay as they are):

# Enable CephFS

ROOK_CSI_ENABLE_CEPHFS: "true"

# Use the kernel client instead of ceph-fuse

CSI_FORCE_CEPHFS_KERNEL_CLIENT: "true"

# NODE_AFFINITY can be set to choose the nodes CSI is deployed on

# I separated the plugin and the provisioner; how to schedule them depends on your cluster resources.

CSI_PROVISIONER_NODE_AFFINITY: "app.rook.role=csi-provisioner"

CSI_PLUGIN_NODE_AFFINITY: "app.rook.plugin=csi"

# Change the metrics ports (optional); I changed them to avoid port conflicts because my cluster uses host networking

# Configure CSI CephFS gRPC and liveness metrics ports

CSI_CEPHFS_GRPC_METRICS_PORT: "9491"

CSI_CEPHFS_LIVENESS_METRICS_PORT: "9481"

# Configure CSI RBD grpc and liveness metrics port

CSI_RBD_GRPC_METRICS_PORT: "9490"

CSI_RBD_LIVENESS_METRICS_PORT: "9480"

# Change the Rook image to speed up deployment

image: registry.aliyuncs.com/google_containers/rook/ceph:v1.5.1

# Nodes used for storage

- name: DISCOVER_AGENT_NODE_AFFINITY

  value: "app.rook=storage"

# Enable automatic device discovery

- name: ROOK_ENABLE_DISCOVERY_DAEMON

  value: "true"
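The NODE_AFFINITY values above only match nodes that carry those labels. The sketch below applies them, using hypothetical node names (take the real ones from `kubectl get nodes`). The label keys and values are the ones used in the operator settings above, and the function name is my own.

```shell
# Sketch: label nodes so the NODE_AFFINITY settings above can match them.
# Node names passed in are hypothetical; list yours with: kubectl get nodes
label_rook_nodes() {
  local provisioner_nodes="$1" plugin_nodes="$2" storage_nodes="$3" n
  for n in $provisioner_nodes; do   # CSI_PROVISIONER_NODE_AFFINITY
    kubectl label node "$n" app.rook.role=csi-provisioner --overwrite || return 1
  done
  for n in $plugin_nodes; do        # CSI_PLUGIN_NODE_AFFINITY
    kubectl label node "$n" app.rook.plugin=csi --overwrite || return 1
  done
  for n in $storage_nodes; do       # DISCOVER_AGENT_NODE_AFFINITY
    kubectl label node "$n" app.rook=storage --overwrite || return 1
  done
}
```

For example: `label_rook_nodes "k8s01" "k8s01 k8s02 k8s03" "k8s02 k8s03"`, then check with `kubectl get nodes -L app.rook.role,app.rook.plugin,app.rook`. The CSI plugin has to run on every node where pods mount Ceph volumes, so the plugin label usually goes on all workers.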

There are more settings; change them as needed. Then apply the operator:

[root@k8s01 ceph]# kubectl apply -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

Deploy cluster.yaml:

[root@k8s01 ceph]# kubectl apply -f cluster.yaml
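For orientation, here is a trimmed-down sketch of the kind of CephCluster resource that cluster.yaml defines. The field names follow my understanding of the ceph.rook.io/v1 CephCluster CRD in Rook 1.5. The image tag (borrowed from the upgrade section below) and the device-selection values are assumptions, so compare them against the cluster.yaml shipped with your Rook version rather than applying this as-is.

```shell
# Sketch: write a minimal CephCluster manifest (values are illustrative).
cat > cluster-minimal.yaml <<'EOF'
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.5        # assumption: use the tag that matches your Rook release
  dataDirHostPath: /var/lib/rook    # mon/config state on each host; the uninstall step deletes it
  mon:
    count: 3
    allowMultiplePerNode: false
  dashboard:
    enabled: true
    ssl: false                      # plain HTTP on port 7000
  storage:
    useAllNodes: true
    useAllDevices: true             # only empty disks (no partitions/file systems) are picked up
EOF
```

Apply it with `kubectl apply -f cluster-minimal.yaml`. The `useAllDevices` behavior is why a disk with leftover BlueStore data gets skipped, as in the OSD problem further down.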

Deploy toolbox.yaml

Add the following securityContext to the toolbox container for convenience:

securityContext:

  privileged: true

  readOnlyRootFilesystem: false

  runAsUser: 0

  runAsGroup: 0

Create the toolbox, which generates the client configuration files inside the pod, and check the cluster health:

[root@k8s01 ceph]# kubectl apply -f toolbox.yaml

deployment "rook-ceph-tools" unchanged

[root@k8s01 ceph]# kubectl exec -it $(kubectl get pod -n rook-ceph -l app=rook-ceph-tools -o=jsonpath='{.items[0].metadata.name}') -n rook-ceph -- ceph health

HEALTH_OK
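Typing the long `kubectl exec` line above gets tedious. The sketch below defines two shell helpers. It assumes the toolbox pod carries the label app=rook-ceph-tools in the rook-ceph namespace, as in the command above; the function names are my own. `-t` is left out so the output can be captured and compared without stray carriage returns.

```shell
# Sketch: run ceph commands in the toolbox and wait for HEALTH_OK.
NS="${NS:-rook-ceph}"

ceph_tools() {
  local pod
  pod="$(kubectl -n "$NS" get pod -l app=rook-ceph-tools \
        -o jsonpath='{.items[0].metadata.name}')" || return 1
  [ -n "$pod" ] || { echo "toolbox pod not found" >&2; return 1; }
  kubectl -n "$NS" exec "$pod" -- ceph "$@"
}

wait_for_health_ok() {
  local tries="${1:-60}" delay="${2:-10}" status i
  for i in $(seq 1 "$tries"); do
    status="$(ceph_tools health 2>/dev/null)"
    case "$status" in
      HEALTH_OK*) echo "HEALTH_OK after $i check(s)"; return 0 ;;
    esac
    echo "attempt $i/$tries: ${status:-no answer yet}" >&2
    sleep "$delay"
  done
  return 1
}
```

Usage: `ceph_tools osd tree`, or `wait_for_health_ok 60 10` right after applying cluster.yaml. A new cluster may stay in HEALTH_WARN for a while before the OSDs come up.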

Using Ceph from a node: installing the client on the host requires packages that match both the Ceph version and the operating system version.

Here I use CentOS 9 and Ceph 17.2.5:

# cat > /etc/yum.repos.d/ceph.repo <<-EOF

[ceph]

name=ceph

baseurl=http://mirrors.aliyun.com/ceph/rpm-17.2.5/el9/x86_64/

gpgcheck=0

priority=1

[ceph-noarch]

name=cephnoarch

baseurl=http://mirrors.aliyun.com/ceph/rpm-17.2.5/el9/noarch/

gpgcheck=0

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-17.2.5/el9/SRPMS

gpgcheck=0

priority=1

EOF
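If your Ceph or OS version differs, only the version and EL number in the repo file above need to change. The sketch below generates the file. It assumes the mirror keeps the same `rpm-<version>/el<N>/` layout for the release you pick, so check the directory listing on mirrors.aliyun.com first. The function name is hypothetical, and gpgcheck stays disabled as in the original.

```shell
# Sketch: generate ceph.repo for a given Ceph release and EL version.
write_ceph_repo() {
  local version="${1:-17.2.5}" el="${2:-9}" out="${3:-/etc/yum.repos.d/ceph.repo}"
  local base="http://mirrors.aliyun.com/ceph/rpm-${version}/el${el}"
  cat > "$out" <<EOF
[ceph]
name=ceph
baseurl=${base}/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=cephnoarch
baseurl=${base}/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
gpgcheck=0
priority=1
EOF
}
```

Usage: `write_ceph_repo 17.2.5 9 /etc/yum.repos.d/ceph.repo && yum makecache`.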

Install:

[root@k8s-254 ~]# yum install -y ceph-common

Error:

Problem: conflicting requests

- nothing provides liboath.so.0()(64bit) needed by ceph-common-2:17.2.5-0.el9.x86_64

- nothing provides liboath.so.0(LIBOATH_1.2.0)(64bit) needed by ceph-common-2:17.2.5-0.el9.x86_64

- nothing provides libtcmalloc.so.4()(64bit) needed by ceph-common-2:17.2.5-0.el9.x86_64

- nothing provides liboath.so.0(LIBOATH_1.10.0)(64bit) needed by ceph-common-2:17.2.5-0.el9.x86_64

- nothing provides libthrift-0.14.0.so()(64bit) needed by ceph-common-2:17.2.5-0.el9.x86_64

(try to add '--skip-broken' to skip uninstallable packages or '--nobest' to use not only best candidate packages)

# The missing dependencies have to be downloaded manually (look them up on https://centos.pkgs.org/), then install again

# wget https://dl.fedoraproject.org/pub/epel/9/Everything/x86_64/Packages/l/liboath-2.6.7-2.el9.x86_64.rpm

# wget https://dl.fedoraproject.org/pub/epel/9/Everything/x86_64/Packages/g/gperftools-libs-2.9.1-2.el9.x86_64.rpm

# wget https://dl.fedoraproject.org/pub/epel/9/Everything/x86_64/Packages/l/libunwind-1.6.2-1.el9.x86_64.rpm

# wget https://dl.fedoraproject.org/pub/epel/9/Everything/x86_64/Packages/t/thrift-0.14.0-7.el9.x86_64.rpm

[root@k8s01 ~]# ceph -s

2021-03-29 13:29:43.244 7f4cbd2cd700 -1 Errors while parsing config file!

2021-03-29 13:29:43.244 7f4cbd2cd700 -1 parse_file: cannot open /etc/ceph/ceph.conf: (2) No such file or directory

2021-03-29 13:29:43.244 7f4cbd2cd700 -1 parse_file: cannot open /root/.ceph/ceph.conf: (2) No such file or directory

2021-03-29 13:29:43.244 7f4cbd2cd700 -1 parse_file: cannot open ceph.conf: (2) No such file or directory

Error initializing cluster client: ObjectNotFound('error calling conf_read_file',)

The client needs the configuration files from the toolbox pod.

Inside the pod:

[root@k8s01 /]# cat /etc/ceph/ceph.conf

[global]

mon_host = 172.20.40.107:6790,172.20.40.173:6790,172.20.40.249:6790

[client.admin]

keyring = /etc/ceph/keyring

[root@test249 /]# cat /etc/ceph/keyring

[client.admin]

key = AQCl+05gXxVmKRAA56z5/ge+4/h1pPPjLTHRgg==

On the node, write the same two files, then verify.

If the node is part of the Kubernetes cluster (kubectl works there):

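kubectl exec -n rook-ceph $(kubectl get pod -n rook-ceph -l app=rook-ceph-tools -o=jsonpath='{.items[0].metadata.name}') -- cat /etc/ceph/ceph.conf | tee /etc/ceph/ceph.conf

kubectl exec -n rook-ceph $(kubectl get pod -n rook-ceph -l app=rook-ceph-tools -o=jsonpath='{.items[0].metadata.name}') -- cat /etc/ceph/keyring | tee /etc/ceph/keyring   # no -t: a TTY would add carriage returns to the copied files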

Or manually:

[root@test173 ~]# vi /etc/ceph/ceph.conf

[root@test173 ~]# vi /etc/ceph/keyring

[root@test173 ~]# ceph -s

cluster:

id: 3d90ed67-7f00-47ae-ae3f-dbdd837c607c

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,d

mgr: a(active)

mds: test-1/1/1 up {0=test-a=up:active}, 1 up:standby-replay

osd: 4 osds: 3 up, 3 in

data:

pools: 3 pools, 300 pgs

objects: 170.7 k objects, 79 GiB

usage: 240 GiB used, 1.2 TiB / 1.4 TiB avail

pgs: 300 active+clean

io:

client: 5.8 KiB/s rd, 11 KiB/s wr, 2 op/s rd, 2 op/s wr
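Instead of editing the two files with vi, you can write them non-interactively. The sketch below assumes you pass in the mon_host value and the client.admin key read from the toolbox pod. The function name is hypothetical, and the target directory defaults to /etc/ceph. The keyring is written under a 077 umask because it contains the admin secret.

```shell
# Sketch: write a minimal client config + keyring for client.admin.
write_ceph_client_files() {
  local mon_host="$1" key="$2" dir="${3:-/etc/ceph}"
  if [ -z "$mon_host" ] || [ -z "$key" ]; then
    echo "usage: write_ceph_client_files MON_HOST KEY [DIR]" >&2
    return 2
  fi
  mkdir -p "$dir" || return 1
  cat > "$dir/ceph.conf" <<EOF
[global]
mon_host = ${mon_host}

[client.admin]
keyring = ${dir}/keyring
EOF
  # The keyring holds a secret: create it readable by root only.
  ( umask 077 && printf '[client.admin]\nkey = %s\n' "$key" > "$dir/keyring" )
}
```

Usage: `write_ceph_client_files "172.20.40.107:6790,172.20.40.173:6790,172.20.40.249:6790" "<key from the toolbox>" && ceph -s`.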

Ceph dashboard

Apply the file dashboard-external-http.yaml:

# cat dashboard-external-http.yaml
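The content of that file is not reproduced here. The sketch below shows a NodePort Service along the lines of what Rook's example defines. It assumes the dashboard serves plain HTTP on port 7000 (the port in the `kubectl get svc` output below) and that the mgr pods carry the labels app=rook-ceph-mgr and rook_cluster=rook-ceph. Check the file in your checkout before relying on these selectors.

```shell
# Sketch: a NodePort Service exposing the mgr dashboard over HTTP.
cat > dashboard-external-http-sketch.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-http
  namespace: rook-ceph
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
spec:
  type: NodePort
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  ports:
  - name: dashboard
    port: 7000
    protocol: TCP
    targetPort: 7000
EOF
```

Apply it with `kubectl apply -f dashboard-external-http-sketch.yaml`. Kubernetes then assigns the node port itself (32070 in the output below).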

Example output after applying it:

[root@k8s01 ceph]# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

csi-cephfsplugin-metrics ClusterIP 10.105.91.163 <none> 8080/TCP,8081/TCP 159m

csi-rbdplugin-metrics ClusterIP 10.104.68.21 <none> 8080/TCP,8081/TCP 159m

rook-ceph-mgr ClusterIP 10.108.132.13 <none> 9283/TCP 157m

rook-ceph-mgr-dashboard ClusterIP 10.101.67.94 <none> 7000/TCP 157m

rook-ceph-mgr-dashboard-external-http NodePort 10.68.18.182 <none> 7000:32070/TCP 160m

rook-ceph-mon-a ClusterIP 10.96.153.68 <none> 6789/TCP,3300/TCP 159m

rook-ceph-mon-b ClusterIP 10.109.178.82 <none> 6789/TCP,3300/TCP 158m

rook-ceph-mon-c ClusterIP 10.104.162.214 <none> 6789/TCP,3300/TCP 157

The user is admin. Get the password:

[root@k8s01 ceph]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
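The sketch below prints the dashboard URL and the admin password together. It assumes the NodePort Service name shown in the listing above (rook-ceph-mgr-dashboard-external-http) and the rook-ceph-dashboard-password Secret from the command above. The function name is hypothetical, and it simply uses the first node's InternalIP, since a NodePort is normally reachable on every node.

```shell
# Sketch: print the dashboard URL and admin password in one go.
dashboard_info() {
  local ns="${1:-rook-ceph}" node_ip port pass
  port="$(kubectl -n "$ns" get svc rook-ceph-mgr-dashboard-external-http \
          -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  node_ip="$(kubectl get nodes \
          -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')" || return 1
  pass="$(kubectl -n "$ns" get secret rook-ceph-dashboard-password \
          -o jsonpath="{['data']['password']}" | base64 --decode)" || return 1
  echo "URL:      http://${node_ip}:${port}"
  echo "user:     admin"
  echo "password: ${pass}"
}
```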

Or set a new password directly.

Inside the rook-ceph-tools container:

echo 'MyPaawo!d' > /tmp/pass.txt

ceph dashboard ac-user-set-password admin -i /tmp/pass.txt

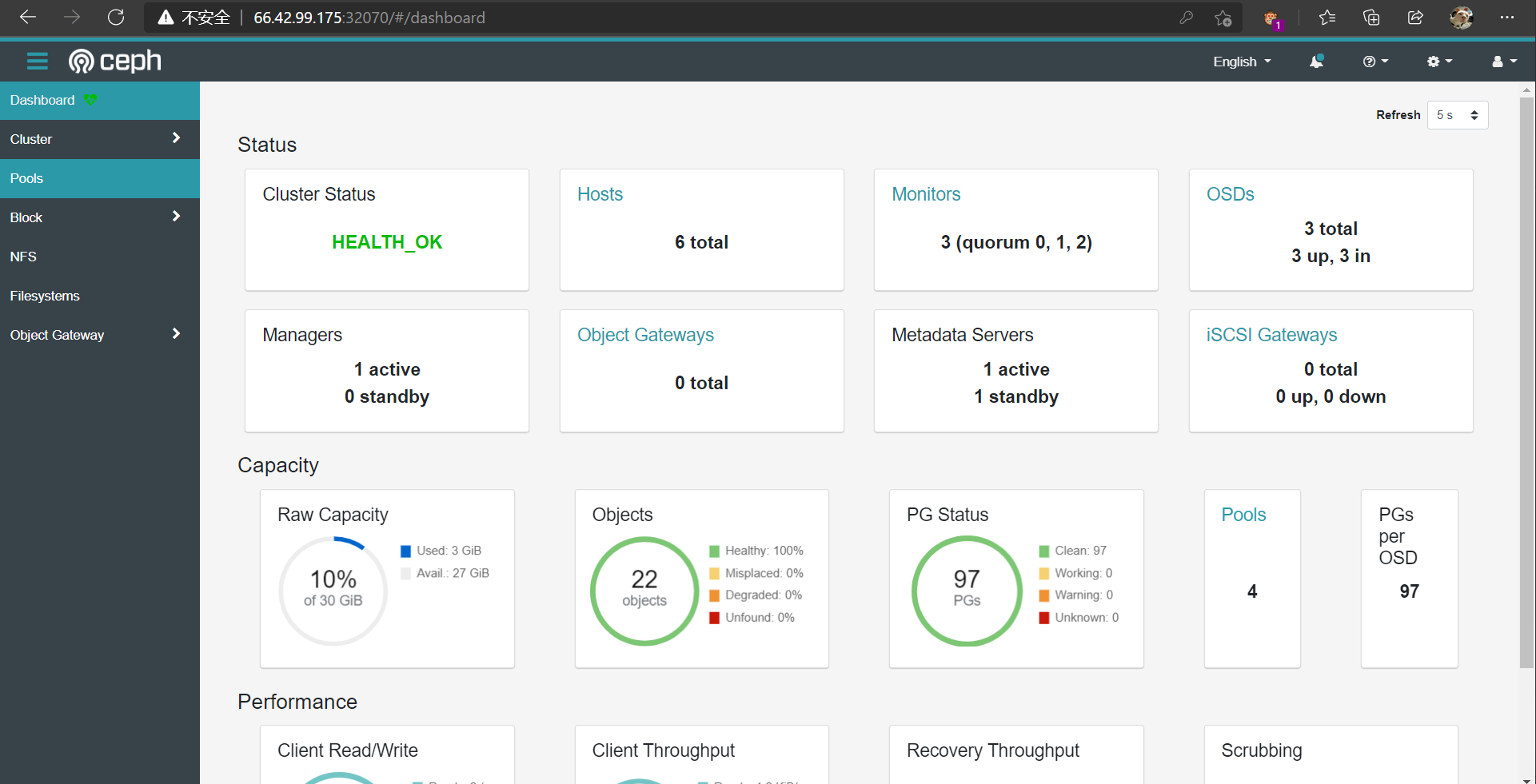

After logging in, the dashboard looks like the figure above.

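Block storage: create block storage to be used by a pod

Object storage: create an object store that can be accessed both inside and outside the Kubernetes cluster

Shared file system: create a file system to be shared among multiple pods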

RBD I/O statistics: enableRBDStats: true

apiVersion: ceph.rook.io/v1
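The manifest above breaks off after its first line. Here is a minimal sketch of a replicated CephBlockPool with the statistics switch turned on. The pool name replicapool (the one deleted in the uninstall step), three replicas, and the host failure domain are assumptions. `enableRBDStats` is the CephBlockPool field named above; as I understand it, it makes Ceph collect per-image RBD I/O statistics, which the mgr Prometheus module then exposes.

```shell
# Sketch: a replicated block pool with per-image RBD statistics enabled.
cat > pool-rbdstats.yaml <<'EOF'
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3
  enableRBDStats: true   # collect per-image RBD I/O statistics
EOF
```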

Switch the RBD mounter to nbd:

---
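The manifest above is truncated. Here is a sketch of an RBD StorageClass that maps volumes with rbd-nbd instead of the kernel RBD client. It assumes the rook-ceph namespace, the replicapool pool, and the CSI secret names used in Rook's RBD storageclass example (rook-csi-rbd-provisioner and rook-csi-rbd-node); verify them against csi/rbd/storageclass.yaml in your checkout. The StorageClass name is hypothetical.

```shell
# Sketch: RBD StorageClass using the rbd-nbd mounter.
cat > storageclass-rbd-nbd.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block-nbd
provisioner: rook-ceph.rbd.csi.ceph.com   # <operator namespace>.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph
  pool: replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
  mounter: rbd-nbd          # map with rbd-nbd instead of the kernel RBD client
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF
```

The nodes that mount these volumes also need the nbd kernel module (`modprobe nbd`).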

Problems encountered


W0908 03:00:16.166815 1 client_config.go:617] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

The OSD prepare job completed, but no OSD was created:

2023-09-22 10:08:54.554152 I | cephosd: skipping device "sda1" with mountpoint "boot"

2023-09-22 10:08:54.554156 I | cephosd: skipping device "sda2" because it contains a filesystem "LVM2_member"

2023-09-22 10:08:54.554158 I | cephosd: skipping device "dm-0" with mountpoint "rootfs"

2023-09-22 10:08:54.554160 I | cephosd: skipping device "nvme0n1" because it contains a filesystem "ceph_bluestore"

2023-09-22 10:08:54.561332 I | cephosd: configuring osd devices: {"Entries":{}}

2023-09-22 10:08:54.561363 I | cephosd: no new devices to configure. returning devices already configured with ceph-volume.

2023-09-22 10:08:54.561509 D | exec: Running command: stdbuf -oL ceph-volume --log-path /tmp/ceph-log lvm list --format json

2023-09-22 10:08:54.856867 D | cephosd: {}

2023-09-22 10:08:54.856895 I | cephosd: 0 ceph-volume lvm osd devices configured on this node

2023-09-22 10:08:54.856912 D | exec: Running command: stdbuf -oL ceph-volume --log-path /tmp/ceph-log raw list --format json

2023-09-22 10:08:55.280026 D | cephosd: {

"ae6b3a5c-2425-4433-b185-06ce0557ccdc": {

"ceph_fsid": "676d13b0-9985-4a3c-a989-7b299aff885a",

"device": "/dev/nvme0n1",

"osd_id": 1,

"osd_uuid": "ae6b3a5c-2425-4433-b185-06ce0557ccdc",

"type": "bluestore"

}

}

The disk still holds leftover data (skipping device "nvme0n1" because it contains a filesystem "ceph_bluestore"), so wipe this disk before letting Rook prepare it again.
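The sketch below wipes such a disk, modeled on the cleanup commands in Rook's teardown documentation (sgdisk plus zeroing the start of the disk, where the BlueStore label lives). It destroys all data on the device. For that reason the function refuses to run unless CONFIRM=yes is set; the function name and this guard are my own additions. After wiping, restart the operator so the device is discovered again.

```shell
# Sketch: wipe leftover Ceph/BlueStore data from a disk so Rook will reuse it.
# DANGER: destroys everything on the device.
zap_disk() {
  local disk="$1" dm
  if [ "${CONFIRM:-}" != "yes" ]; then
    echo "refusing to wipe ${disk:-<none>}: set CONFIRM=yes" >&2
    return 1
  fi
  [ -b "$disk" ] || { echo "$disk is not a block device" >&2; return 1; }
  sgdisk --zap-all "$disk" || return 1                                   # partition tables
  dd if=/dev/zero of="$disk" bs=1M count=100 oflag=direct,dsync || return 1  # BlueStore label at offset 0
  # Remove stale ceph LVM device-mapper entries, if any were left behind.
  for dm in /dev/mapper/ceph-*; do
    [ -e "$dm" ] && dmsetup remove "$dm"
  done
  rm -rf /dev/ceph-*
  partprobe "$disk" 2>/dev/null || true
}
```

Usage: `CONFIRM=yes zap_disk /dev/nvme0n1`, run on the node that owns the disk.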

Uninstalling

Before deleting the Ceph cluster, clean up the related pods first.

kubectl delete -n rook-ceph cephblockpool replicapool

On all hosts:

rm -rf /var/lib/rook/*   # adjust to the dataDirHostPath set in cluster.yaml

The rook-ceph namespace is stuck in Terminating:

[root@test-173 ~]# kubectl get ns |grep Terminating

rook-ceph Terminating 13h

[root@test-249 ~]# kubectl api-resources --verbs=list --namespaced -o name | xargs -n 1 kubectl get --show-kind --ignore-not-found -n rook-ceph

NAME ACTIVEMDS AGE

cephfilesystem.ceph.rook.io/test 1 34m

It turns out this CRD cannot be deleted, so clear its finalizers:

kubectl patch crds cephfilesystems.ceph.rook.io -p '{"metadata":{"finalizers": []}}' --type=merge

After that, the deletion completes:

[root@test-249 ~]# kubectl get ns |grep Terminating

Alternatively, list what is left in the namespace:

[root@hdp01 examples]# kubectl api-resources --verbs=list --namespaced -o name | xargs -n 1 kubectl get --show-kind --ignore-not-found -n rook-ceph

Then edit each remaining resource and set finalizers: [].
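Clearing finalizers by hand, one resource at a time, is tedious. The sketch below does it for every object still listed in the namespace, using the same `kubectl api-resources` loop as above. The function name is hypothetical. This is a last resort for teardown only: Rook's finalizers exist to protect data, so never run it against a cluster you want to keep.

```shell
# Sketch: clear finalizers on every remaining namespaced object in a stuck namespace.
clear_ns_finalizers() {
  local ns="${1:-rook-ceph}" kind obj
  for kind in $(kubectl api-resources --verbs=list --namespaced -o name); do
    for obj in $(kubectl get -n "$ns" "$kind" --ignore-not-found -o name 2>/dev/null); do
      echo "clearing finalizers on $obj"
      kubectl patch -n "$ns" "$obj" --type=merge -p '{"metadata":{"finalizers":[]}}'
    done
  done
}
```

Usage: `clear_ns_finalizers rook-ceph`, then check again with `kubectl get ns | grep Terminating`.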

Upgrading

Patch release upgrade

E.g. upgrading from 1.5.0 to 1.5.1:

git clone --single-branch --branch v1.5.1 https://github.com/rook/rook.git

cd $YOUR_ROOK_REPO/cluster/examples/kubernetes/ceph/

kubectl apply -f common.yaml -f crds.yaml

kubectl -n rook-ceph set image deploy/rook-ceph-operator rook-ceph-operator=rook/ceph:v1.5.1
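After the operator image is switched, the operator rolls the other Rook deployments one by one. The sketch below checks progress, following the approach in Rook's upgrade guide: it lists the distinct `rook-version` labels on the cluster's deployments, and once a single value remains, every deployment has been updated. The namespace default and the function name are assumptions.

```shell
# Sketch: wait for the new operator, then list distinct rook-version labels.
check_rook_upgrade() {
  local ns="${1:-rook-ceph}"
  kubectl -n "$ns" rollout status deploy/rook-ceph-operator --timeout=300s || return 1
  kubectl -n "$ns" get deployments -l rook_cluster="$ns" \
    -o jsonpath='{range .items[*]}{"rook-version="}{.metadata.labels.rook-version}{"\n"}{end}' \
    | sort | uniq
}
```

Rerun it until only one line (the new version) is printed.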

Upgrading across minor versions

E.g. from 1.4.0 to 1.5.1:

# Parameterize the environment

Upgrade Rook:

git clone --single-branch --branch v1.5.1 https://github.com/rook/rook.git

Upgrade Ceph:

NEW_CEPH_IMAGE='ceph/ceph:v15.2.5'
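The Ceph upgrade itself is driven by changing the image in the CephCluster resource. The sketch below is modeled on Rook's upgrade guide and assumes the CephCluster is named rook-ceph in the rook-ceph namespace (the cluster.yaml defaults); the function name is hypothetical. It patches `spec.cephVersion.image` and then lists the distinct `ceph-version` labels, which converge to a single value once every daemon runs the new image.

```shell
# Sketch: point the CephCluster at a new Ceph image and report daemon versions.
upgrade_ceph_image() {
  local image="$1" ns="${2:-rook-ceph}" cluster="${3:-rook-ceph}"
  [ -n "$image" ] || { echo "usage: upgrade_ceph_image IMAGE [NS] [CLUSTER]" >&2; return 2; }
  kubectl -n "$ns" patch CephCluster "$cluster" --type=merge \
    -p "{\"spec\":{\"cephVersion\":{\"image\":\"${image}\"}}}" || return 1
  kubectl -n "$ns" get deployments -l rook_cluster="$ns" \
    -o jsonpath='{range .items[*]}{"ceph-version="}{.metadata.labels.ceph-version}{"\n"}{end}' \
    | sort | uniq
}
```

Usage: `upgrade_ceph_image "$NEW_CEPH_IMAGE"`. Then rerun the label query, or `ceph versions` in the toolbox, until only one version remains.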

Cluster recovery

If the cluster was deleted by mistake but the disks and the files under /var/lib/rook were not wiped, it can be recovered.

In rook-ceph, only the monitor and OSD services are stateful, so recovering the Ceph cluster only requires recovering these two services.

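If you have an etcd backup, see my earlier post on Kubernetes problems, "accidentally deleted an important namespace" (not recommended; the impact is large).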

Otherwise, recover the mon information and reinstall the cluster.

Get the user and its key:

# cat /var/lib/rook/rook-ceph/client.admin.keyring

[client.admin]   # the user is client.admin

key = AQAY8B9koAamJBAAnSGyyH3LjmqwsPRkei1tmQ==   # the key of client.admin

caps mds = "allow *"

caps mon = "allow *"

caps osd = "allow *"

caps mgr = "allow *"

Get the endpoint information:

# cat /var/lib/rook/rook-ceph/rook-ceph.config

[global]

fsid = 8ca801df-f763-4682-b23c-2c6f4b71b392   # cluster ID

mon initial members = a b c   # the mons; there are 3

mon host = [v2:192.168.2.133:3300,v1:192.168.2.133:6789],[v2:192.168.2.132:3300,v1:192.168.2.132:6789],[v2:192.168.2.131:3300,v1:192.168.2.131:6789]   # by default these are not the host IPs; change them to the host IPs. Check ls /var/lib/rook on each node (192.168.2.131 is the current node, which runs mon-c) and map each mon to its host accordingly

[client.admin]

keyring = /var/lib/rook/rook-ceph/client.admin.keyring

The values above must be base64-encoded before being filled into the Secret:

# echo -n "AQAY8B9koAamJBAAnSGyyH3LjmqwsPRkei1tmQ==" | base64 -i -
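Each value has to be encoded without a trailing newline, which is why the command above uses `echo -n`. The small sketch below encodes all the fields at once. The helper name `b64` is mine, and the inputs are the example fsid and client.admin key from the files above. The outputs match the ceph-secret, ceph-username, and fsid fields of the rook-ceph-mon Secret shown below; mon-secret (the mon. key) is encoded the same way.

```shell
# Sketch: base64-encode the values for the rook-ceph-mon Secret.
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

FSID='8ca801df-f763-4682-b23c-2c6f4b71b392'
ADMIN_KEY='AQAY8B9koAamJBAAnSGyyH3LjmqwsPRkei1tmQ=='

echo "ceph-secret: $(b64 "$ADMIN_KEY")"   # QVFBWThCOWtvQWFtSkJBQW5TR3l5SDNMam1xd3NQUmtlaTF0bVE9PQ==
echo "ceph-username: $(b64 client.admin)" # Y2xpZW50LmFkbWlu
echo "fsid: $(b64 "$FSID")"               # OGNhODAxZGYtZjc2My00NjgyLWIyM2MtMmM2ZjRiNzFiMzky
```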

While rook-ceph can still be operated through Kubernetes, you can also read these objects directly.

Get rook-ceph-mon-endpoints:

# kubectl get configmaps -n rook-ceph rook-ceph-mon-endpoints -o yaml > rook-ceph-mon-endpoints.yaml   # after editing, the file looks like this

apiVersion: v1
data:
  csi-cluster-config-json: '[{"clusterID":"rook-ceph","monitors":["192.168.2.133:6789","192.168.2.132:6789","192.168.2.131:6789"],"namespace":""}]'
  data: a=192.168.2.133:6789,b=192.168.2.132:6789,c=192.168.2.131:6789
  mapping: '{"node":{"a":{"Name":"192.168.2.133","Hostname":"192.168.2.133","Address":"192.168.2.133"},"b":{"Name":"192.168.2.132","Hostname":"192.168.2.132","Address":"192.168.2.132"},"c":{"Name":"192.168.2.131","Hostname":"192.168.2.131","Address":"192.168.2.131"}}}'
  maxMonId: "2"   # I have 3 mons, so this is 2
kind: ConfigMap
metadata:
  finalizers:
  - ceph.rook.io/disaster-protection
  name: rook-ceph-mon-endpoints
  namespace: rook-ceph
  ownerReferences: null

Get the rook-ceph-mon Secret:

kubectl get secrets -n rook-ceph rook-ceph-mon -o yaml > rook-ceph-mon.yaml   # after editing, the file looks like this

apiVersion: v1
data:
  ceph-secret: QVFBWThCOWtvQWFtSkJBQW5TR3l5SDNMam1xd3NQUmtlaTF0bVE9PQ==
  ceph-username: Y2xpZW50LmFkbWlu
  fsid: OGNhODAxZGYtZjc2My00NjgyLWIyM2MtMmM2ZjRiNzFiMzky
  mon-secret: QVFBWThCOWtBWnB1SXhBQXYyUWxvSFU1aTZiZStYQTZLcEFxMEE9PQ==
kind: Secret
metadata:
  finalizers:
  - ceph.rook.io/disaster-protection
  name: rook-ceph-mon
  namespace: rook-ceph
  ownerReferences: null
type: kubernetes.io/rook

Create the cluster:

kubectl create -f crds.yaml -f common.yaml -f operator.yaml

Wait until the operator is running, then recreate the original configuration:

kubectl create -f rook-ceph-mon.yaml -f rook-ceph-mon-endpoints.yaml

Then follow the installation steps again and apply cluster.yaml, toolbox.yaml, and the remaining files. Everything checks out and the data is back:

[root@imwl-02 ~]# kubectl get pod -n rook-ceph

Check the files:

[root@imwl-02 ~]# df -h |grep /mnt